- The Facts: Quantum physics has revealed astonishing discoveries, many of which challenge long-held belief systems. These discoveries open up discussions of metaphysical realities, and are often labelled as mere interpretations because of how vast their implications are.

- Reflect On: For a long time, authorities have suppressed ideas that are different, even if backed by evidence. Who is deciding what information gets out and is confirmed in the public domain? Who decides to establish something as ‘fact’ within the mainstream?

“I regard consciousness as fundamental. I regard matter as derivative from consciousness. We cannot get behind consciousness. Everything that we talk about, everything that we regard as existing, postulates consciousness.” – Max Planck, the originator of Quantum theory.

A recent article in Scientific American touched on quantum physics, and what it may reveal to us about the true nature of reality. The piece brings up the famous double slit experiment, one that’s been repeated for more than two hundred years. In the experiment, pieces of matter (photons, electrons, etc.) are shot towards a screen that has two slits in it. On the other side of the screen, a video camera records where each piece of matter lands. When scientists close one slit, the camera shows an expected pattern, but when both slits are open, an “interference pattern” emerges and the pieces of matter begin to act like waves, a representation of multiple possibilities. You can watch a visual demonstration of the experiment here.
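To make the two patterns concrete, here is a minimal sketch based on the standard far-field (Fraunhofer) wave formula for one and two slits. The wavelength, slit width and slit separation are illustrative assumptions, not values from the Scientific American piece or from any experiment mentioned here, and the function names are made up for this example.

```python
import math

# Illustrative values only (assumptions, not taken from any experiment in the article).
WAVELENGTH = 500e-9        # metres
SLIT_WIDTH = 20e-6         # metres
SLIT_SEPARATION = 100e-6   # metres, centre to centre


def single_slit_intensity(theta, slit_width=SLIT_WIDTH, wavelength=WAVELENGTH):
    """Relative far-field intensity at angle theta with one slit open."""
    beta = math.pi * slit_width * math.sin(theta) / wavelength
    if beta == 0.0:
        return 1.0  # limit of (sin(beta) / beta) ** 2 as beta goes to 0
    return (math.sin(beta) / beta) ** 2


def double_slit_intensity(theta, slit_width=SLIT_WIDTH,
                          slit_separation=SLIT_SEPARATION,
                          wavelength=WAVELENGTH):
    """Relative far-field intensity at angle theta with both slits open."""
    alpha = math.pi * slit_separation * math.sin(theta) / wavelength
    # Interference fringes (cos^2 term) under the single-slit envelope.
    return math.cos(alpha) ** 2 * single_slit_intensity(theta, slit_width, wavelength)


# Angle of the first dark fringe when both slits are open.
first_dark = math.asin(WAVELENGTH / (2 * SLIT_SEPARATION))

for label, theta in [("centre", 0.0), ("first dark fringe", first_dark)]:
    one = single_slit_intensity(theta)
    two = double_slit_intensity(theta)
    print(f"{label}: one slit = {one:.3f}, two slits = {two:.3f}")
```

At the angle of the first dark fringe, the one-slit value stays close to its central value while the two-slit value drops to essentially zero. That gap between bright and dark bands is the “interference pattern.”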

Basically, it means each photon individually goes through both slits at the same time and interferes with itself. It also goes through one slit, it goes through the other, and it goes through neither of them. The single piece of matter becomes a “wave” of potentials, expressing itself as multiple possibilities, which is why we get the interference pattern. How can a single piece of matter exist and express itself in multiple states without any physical properties until it is measured or observed?

The article in Scientific American states, “some have even used it (the double slit experiment) to argue that the quantum world is influenced by human consciousness, giving our minds an agency and a place in the ontology of the universe. But does this simple experiment really make such a case?”

I stopped reading there for the simple reason that it’s not only this experiment but hundreds, if not thousands, of other studies within the realms of quantum physics and parapsychology that clearly show that, to some degree, our physical material reality is influenced by consciousness in more ways than one. This is not really trivial or a mere interpretation…

This is emphasized by a number of researchers who have conducted the experiment, as well as all of the founders of quantum theory. A paper published in Physics Essays, for example, explains how the experiment has been used a number of times to explore the role of consciousness in shaping the nature of physical reality. It concluded that factors associated with consciousness “significantly” correlated in predicted ways with perturbations in the double-slit interference pattern. Again, here, scientists affected the results of the experiment by simply observing it.

The paper reported that meditators were able to collapse quantum systems at a distance through intention alone. The lead author of the study points out that a “5 sigma” result was the standard behind CERN’s 2012 announcement of the Higgs boson, the discovery that led to the 2013 Nobel Prize in Physics for François Englert and Peter Higgs. In this study, they also reported a 5 sigma result when testing meditators against non-meditators in collapsing the quantum wave function. This means that mental activity, the human mind, attention, and intention, which are a few labels under the umbrella of consciousness, compelled physical matter to act in a certain way.
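For a sense of scale, a “5 sigma” threshold can be turned into a probability. The sketch below assumes a normal distribution and the one-sided convention commonly used in particle physics. The function name is made up for this example, and this is not the calculation performed in the paper.

```python
import math


def one_sided_p_value(sigma):
    """Chance that a standard normal variable lands more than `sigma`
    standard deviations above its mean (one-sided tail)."""
    return 0.5 * math.erfc(sigma / math.sqrt(2))


p = one_sided_p_value(5)
print(f"p-value at 5 sigma: {p:.3e}")
print(f"roughly 1 chance in {1 / p:,.0f}")
```

Under those assumptions, 5 sigma works out to odds of about 1 in 3.5 million that a result at least that extreme would show up by chance alone.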

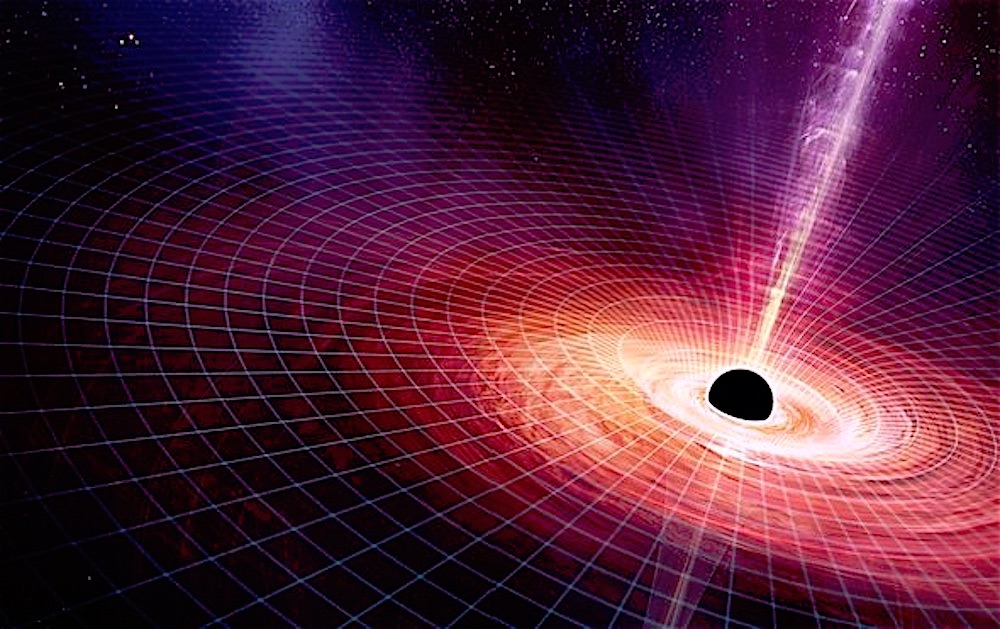

Perhaps the strongest illustration that consciousness and our physical material reality are intertwined is the set of black budget special access program studies that multiple governments worldwide have conducted for decades. In these programs, various phenomena within the realms of quantum physics and parapsychology have been studied, tested, confirmed and used in the field. We’re talking about telepathy, remote viewing and much more. There are even classified documents pertaining to human beings with special abilities who are able to alter physical matter using their minds, as well as peer-reviewed research; here’s one example. There is also the health connection: the placebo effect and the mind-body connection further prove that consciousness and physical material reality are intertwined. When it comes to parapsychology, the science behind it is stronger than the science we used to approve some of our medications… (source)

This is precisely why the American Institutes for Research concluded:

The statistical results of the studies examined are far beyond what is expected by chance. Arguments that these results could be due to methodological flaws in the experiments are soundly refuted. Effects of similar magnitude to those found in government-sponsored research at SRI and SAIC have been replicated at a number of laboratories across the world. Such consistency cannot be readily explained by claims of flaws or fraud.

It was not possible to formulate the laws of quantum mechanics in a fully consistent way without reference to consciousness. – Eugene Wigner, theoretical physicist and mathematician

There is also distant healing, and studies showing what human attention can do not just to a piece of matter, but to another human body. If you want to learn more about this kind of thing, a great place to start is The Institute of Noetic Sciences. I recently wrote about a study that found healing energy could be stored and used to treat cancer cells. You can read more about that here.

There is no doubt about it: consciousness does have an effect on our physical material world. What type of effect is not as well understood, but we know there is one, and it shouldn’t really be called into question, especially in an article published in Scientific American.

At the end of the nineteenth century, physicists discovered empirical phenomena that could not be explained by classical physics. This led to the development, during the 1920s and early 1930s, of a revolutionary new branch of physics called quantum mechanics (QM). QM has questioned the material foundations of the world by showing that atoms and subatomic particles are not really solid objects; they do not exist with certainty at definite spatial locations and definite times. Most importantly, QM explicitly introduced the mind into its basic conceptual structure since it was found that particles being observed and the observer (the physicist and the method used for observation) are linked. According to one interpretation of QM, this phenomenon implies that the consciousness of the observer is vital to the existence of the physical events being observed, and that mental events can affect the physical world. The results of recent experiments support this interpretation. These results suggest that the physical world is no longer the primary or sole component of reality, and that it cannot be fully understood without making reference to the mind. – Dr. Gary Schwartz, professor of psychology, medicine, neurology, psychiatry, and surgery at the University of Arizona

How Does This Apply To Our Lives & Our World In General?

This kind of thing has moved beyond simple interpretation. We also have examples from the black budget, like the STARGATE program, and real-world examples showing that what is discovered at the quantum scale is indeed important and relevant, and does apply in many cases to larger scales. People with ‘special abilities,’ as mentioned above, are one example, and technology that utilizes quantum physics is another, like the ones this ex-Lockheed executive describes, or this one. This type of stuff moved out of the theoretical realm a long time ago, yet again, it’s not really acknowledged. Another example would be over-unity energy, which utilizes the non-physical properties of physical matter. You can read more about that machine here and here.

What we have today is scientific dogma.

The modern scientific worldview is predominantly predicated on assumptions that are closely associated with classical physics. Materialism, the idea that matter is the only reality, is one of these assumptions. A related assumption is reductionism, the notion that complex things can be understood by reducing them to the interactions of their parts, or to simpler or more fundamental things such as tiny material particles. During the 19th century, these assumptions narrowed, turned into dogmas, and coalesced into an ideological belief system that came to be known as “scientific materialism.” This belief system implies that the mind is nothing but the physical activity of the brain and that our thoughts cannot have any effect on our brains and bodies, our actions, and the physical world. – Lisa Miller, Ph.D., Columbia University.

So, why is it not acknowledged or established that consciousness clearly has an effect on our physical material reality? Because, simply, we’re going against belief systems here. This and other types of discoveries bring into play and confirm a metaphysical reality, one that’s been ridiculed by many, especially authority figures, for years.

When something questions our collective established beliefs, no matter how repeatable the results, it’s always going to be greeted with false claims and harsh reactions. We’re simply going through that transition now.

Despite the unrivalled empirical success of quantum theory, the very suggestion that it may be literally true as a description of nature is still greeted with cynicism, incomprehension and even anger. (T. Folger, “Quantum Shmantum”; Discover 22:37-43, 2001)

Today, it’s best to keep an open mind, as new findings are destroying what we previously thought to be true. The next step for science is taking a spiritual leap, because that’s what quantum physics is showing us, and it’s clearly far from a mere interpretation. Our thoughts, feelings, emotions, perceptions and more all influence physical reality. This is why it’s so important to focus on our own state of being, feeling good, and in the simplest form, just being a nice person.

The very fact that these findings have metaphysical and spiritual revelations is exactly what forces one to instantaneously throw the ‘pseudoscience’ label at it. Instead of examining and addressing the evidence, the skeptic uses ridicule to de-bunk something they do not believe in, sort of like what mainstream media tends to do these days quite a bit.

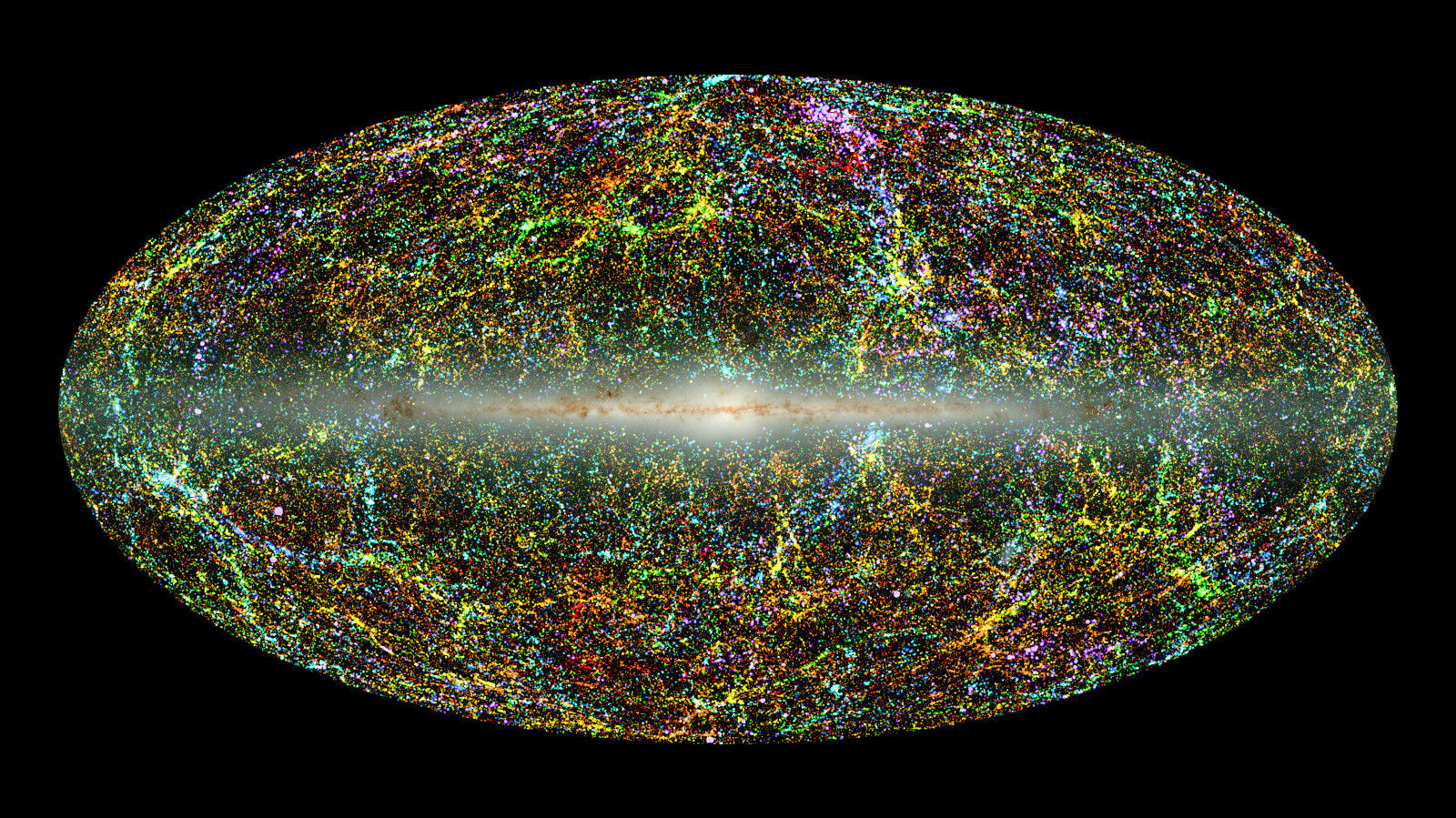

So why is this significant? Well, it’s significant because planet Earth is home to a huge collection of billions of minds. If consciousness does have an effect on our physical material reality, that means in some sense, we are all co-creating our human experience here. We are responsible for the human experience and what happens on the planet, because we are all, collectively, creating it.

That doesn’t mean that if we all collectively have a thought, it will manifest into existence right away. It simply means mind influences matter in various ways that we don’t quite understand yet. If everybody thought the Earth was flat, would it actually be flat? These are the questions we are approaching as we move forward.

When our perception of reality changes, our reality begins to change. When we become aware of something, when we observe what is going on, and when we have paradigm-shifting revelations, these mental shifts bring about a physical change in our human experience. Even in our own individual lives, our emotional state, physical state, state of well being and how we perceive reality around us can also influence what type of human experience we create for ourselves. The experience can also change, depending on how you look at it.

Change the way you look at things, and the things you look at will change.

There is a very spiritual message that comes from quantum physics, and it’s not really an interpretation.

“Broadly speaking, although there are some differences, I think Buddhist philosophy and Quantum Mechanics can shake hands on their view of the world. We can see in these great examples the fruits of human thinking. Regardless of the admiration we feel for these great thinkers, we should not lose sight of the fact that they were human beings just as we are.”

– The Dalai Lama (source)

As many of you reading this will know, 99.99 percent of an atom is empty space, but we’ve recently discovered that it’s actually not empty space, but is full of energy. This is neither debatable nor trivial, and we can now effectively harness that energy and turn it into electrical energy, which is exactly what is discussed in this article. If you go through the whole thing, it provides sufficient evidence. Read carefully.

Change Starts Within

When I think about this stuff, it really hits home that change really does start within, that we as human beings are co-creators, and that together we can change this world any time we choose to do so. Metaphysics and spirituality represent the next scientific revolution, and it all boils down to humanity, as a collective and as individuals, finding our inner peace, losing our buttons so they can’t be pushed, and just overall being good people.

Science can only take us so far. Intuition, gut feelings, emotions and more will all be used to decipher truth more accurately in the future.

Today, we’ve lost our connection to spirituality. This connection was replaced long ago with belief systems that have been given to us in the form of religion, to the point where society is extremely divided when it comes to ‘what is.’ If we are all believing something different, and constantly arguing and conflicting instead of coming together and focusing on what we have in common to create a better world, then we have a problem, especially if you think about the fact that we are all collectively co-creating.

We’ve been programmed to see the world differently from what it actually is. We are living an illusion, and quantum physics is one of many areas that can snap us out of that illusion, as long as it is not restricted and its conclusions are not labelled as mere interpretations.

I fail to see how the spirituality emerging from quantum physics is a mere interpretation, and I see this label as a tactic used by the elite to simply keep us in the same old world paradigm. I believe this is done deliberately.

Once we wake up and realize the power of human consciousness, we will be much more cautious of our thoughts, we will be much more focused on growing ourselves spiritually, and we will realize that greed, ego, fear and separation are completely useless and unnecessary.

Service to others is key, and it’s important that our planet and our ‘leadership’ here be focused solely on serving all of humanity. Right now, that’s not the case, but we are in the midst of a great change, one that has been taking place over a number of years, but on a cosmic scale, it’s happening in an instant. It’s interesting because the world is waking up to the illusions that have guided our actions, to the brainwashing, and to the false information. Our collective consciousness is shifting, and we are creating a new human experience.

We interviewed Franco DeNicola about what is happening with the shift in consciousness. It turned out to be one of the deepest and most important pieces of information we have pulled out of an interview.

By Arjun Walia

From Collective Evolution
